Bridging Sensing, Planning and Interaction

Active Perception Workshop — a great success! One of the most attended workshops at IROS 2025. Thank you to all speakers, authors, attendees and sponsors. Below are a few photos from the workshop. The Active Localization Challenge continues — learn more here and consider participating.

Congrats to our awardees and finalists! Scroll down for the full recap and reach out if you would like to share additional media.

We will soon share recordings of the workshop sessions. Stay tuned!

Estimated workshop audience ~350 people

Keynotes on stage

Lively poster session

We recognized three outstanding papers as Best Paper finalists, with a special Best Presentation Award announced during the closing session.

| Award | Paper | Link |
|---|---|---|
| Best Paper Finalist and Best Presentation Award | Scalable and Expert-Guided Reconstruction for Robotic Exploration | Download |
| Best Paper Finalist | RAVEN: Resilient Aerial Navigation Through Vision-Based Active Perception | Download |
| Best Paper Finalist | Source Term Estimation Using Mobile Robots in Turbulent Environments | Download |

| Time | Session |
|---|---|
| 08:25 – 08:35 | Welcome Remarks (Organizing Committee) |
| 08:35 – 09:00 | Sebastian Scherer (CMU, remote). Plenary Talk: Multi-Robot Information Gathering in Challenging Environments |
| 09:00 – 09:25 | Tai Wang (Shanghai AI Lab). Plenary Talk: Towards a Vision-Language Navigation Foundation Model via Sim2Real |
| 09:25 – 09:50 | Marija Popovic (TU Delft). Plenary Talk: Reinforcement Learning for Active Perception using UAVs |
| 09:50 – 10:10 | Spotlight Talks: presentations from selected award finalists |
| 10:10 – 10:50 | Coffee Break ☕ and Poster Session |
| 10:50 – 11:15 | Boyu Zhou (SUSTech). Plenary Talk: Autonomous Exploration: From Traditional to AI-driven Approaches |
| 11:15 – 11:40 | Jianxiang Feng (Agile Robots). Plenary Talk: Learning Robust Perception and Manipulation via Uncertainty-Aware Intelligence |
| 11:40 – 12:05 | Stefan Leutenegger (ETH Zurich). Plenary Talk: Exploration with Drones for Geometric and Semantic Reconstruction in the Wild |
| 12:05 – 12:30 | Interactive Discussion: guided group discussions with invited speakers and organizers on “What’s Next in Active Perception” |
| 12:30 – 12:45 | Award & Closing Remarks: final remarks by the organizing committee and award presentation |

Below is the list of accepted papers for the Active Perception Workshop.

| Title | Authors | Publication | Link |
|---|---|---|---|
| RISeg: Real-Time Model-Free Interactive Segmentation via Body Frame-Invariant Features | Howard H. Qian, Yiting Chen, Gaotian Wang, Podshara Chanrungmaneekul, Kaiyu Hang | IROS 2025 | Download |
| DAMM-LOAM: Degeneracy-Aware Mapping and Motion Estimation for Robust LiDAR Odometry | Nishant Chandna, Akshat Kaushal | ICAR 2025 | Download |
| Distributed Multi-Robot Multi-Sensor SLAM for Cooperative Active Perception | Jun Chen, Mohammed Abugurain, Philip Dames, Shinkyu Park | TRO 2025 | Download |
| Scalable and Expert-Guided Reconstruction for Robotic Exploration | Yuhong Cao, Yizhuo Wang, Jingsong Liang, Guillaume Adrien Sartoretti | Workshop paper | Download |
| Temporal Prior-Guided View Planning for Efficient 3D Scene Reconstruction | Sicong Pan, Xuying Huang, Maren Bennewitz | Workshop paper | Download |
| GauSS-MI: Gaussian Splatting for Mutual Information-Based Active Perception | Yuhan Xie, Yixi Cai, Yinqiang Zhang, Lei Yang, Jia Pan | RSS 2025 | Download |
| Efficient Manipulation-Enhanced Perception Using Uncertainty-Aware Control | Nils Dengler, Jesper Mücke, Rohit Menon, Maren Bennewitz | Humanoid 2025 | Download |
| Quality-Driven Next Best View Planning for Active 3D Perception | Benjamin Sportich, Kenza Boubakri, Olivier Simonin, Alessandro Renzaglia | Workshop paper | Download |
| RAVEN: Resilient Aerial Navigation Through Vision-Based Active Perception | Seungchan Kim, Omar Alama, Dmytro Kurdydyk, John Keller, Nikhil Varma Keetha, Wenshan Wang, Yonatan Bisk, Sebastian Scherer | Workshop paper | Download |
| Active Sensing for Target Tracking in Dynamic Environments | Sanjeev Ramkumar Sudha, Marija Popovic, Erlend M. Coates | Workshop paper | Download |
| RF-Based 3D Through-Wall Positioning for Autonomous Robotic Exploration | Rian Atri, Tianxi Liang | Workshop paper | Download |
| Source Term Estimation Using Mobile Robots in Turbulent Environments | Junhee Lee, Hongro Jang, Seunghwan Kim, Park HyoungHo, Hyungjin Kim, Changseung Kim, Hyondong Oh | Workshop paper | Download |
| Semantic GSL: Semantically Guided Gas Source Localization in Unknown Environments | Park HyoungHo, Hongro Jang, Seunghwan Kim, Junhee Lee, Jiwoo Kim, Hyondong Oh | Workshop paper | Download |

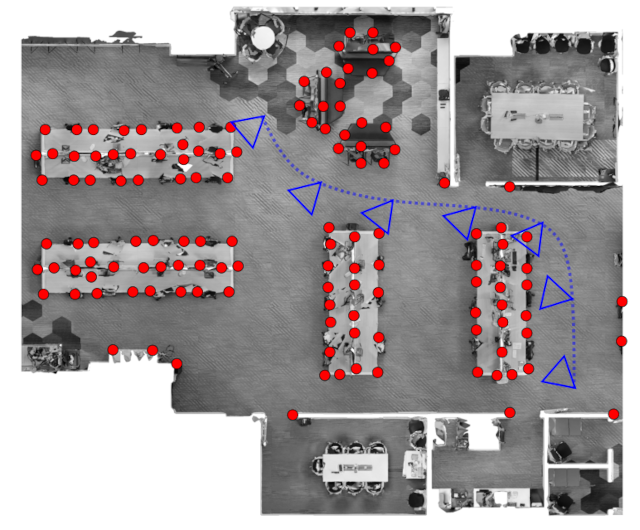

You are provided with a sparse map of the environment and a robot equipped with a camera that can rotate freely with respect to the mobile base. Given a set of robot waypoints, the goal is to rotate the camera towards the more meaningful parts of the map in order to improve localization accuracy.
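To make the task concrete, here is a minimal sketch of one naive baseline, not the challenge's reference method. It assumes a 2D simplification in which the sparse map is a list of landmark positions, and it treats "meaningful" as "many landmarks in view". The names `visible_count` and `best_camera_yaw`, the 90° field of view, and the 10 m range are all hypothetical choices for illustration.

```python
import math

def visible_count(position, yaw, landmarks, fov=math.radians(90), max_range=10.0):
    """Count landmarks inside a camera's horizontal field of view (2D, map frame)."""
    px, py = position
    count = 0
    for lx, ly in landmarks:
        dx, dy = lx - px, ly - py
        dist = math.hypot(dx, dy)
        if dist == 0.0 or dist > max_range:
            continue
        bearing = math.atan2(dy, dx)
        # Wrap the angular offset to [-pi, pi) before comparing with half the FOV.
        offset = (bearing - yaw + math.pi) % (2 * math.pi) - math.pi
        if abs(offset) <= fov / 2:
            count += 1
    return count

def best_camera_yaw(position, landmarks, num_candidates=36, **camera):
    """Pick the candidate yaw (map frame) that sees the most landmarks."""
    candidates = [2 * math.pi * k / num_candidates - math.pi
                  for k in range(num_candidates)]
    return max(candidates,
               key=lambda yaw: visible_count(position, yaw, landmarks, **camera))

# Hypothetical data: three landmarks to the east, one to the west.
landmarks = [(5.0, 0.0), (5.0, 1.0), (5.0, -1.0), (-5.0, 0.0)]
waypoints = [(0.0, 0.0), (1.0, 0.0)]
for wp in waypoints:
    yaw = best_camera_yaw(wp, landmarks)
    print(wp, round(math.degrees(yaw), 1), visible_count(wp, yaw, landmarks))
```

The yaw here is expressed in the map frame; to command a camera mounted on the base, one would subtract the base heading at each waypoint. Counting landmarks is only a crude proxy for localization quality, and a stronger approach would likely weigh landmark distinctiveness, viewing angle, and the geometry of the observations.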